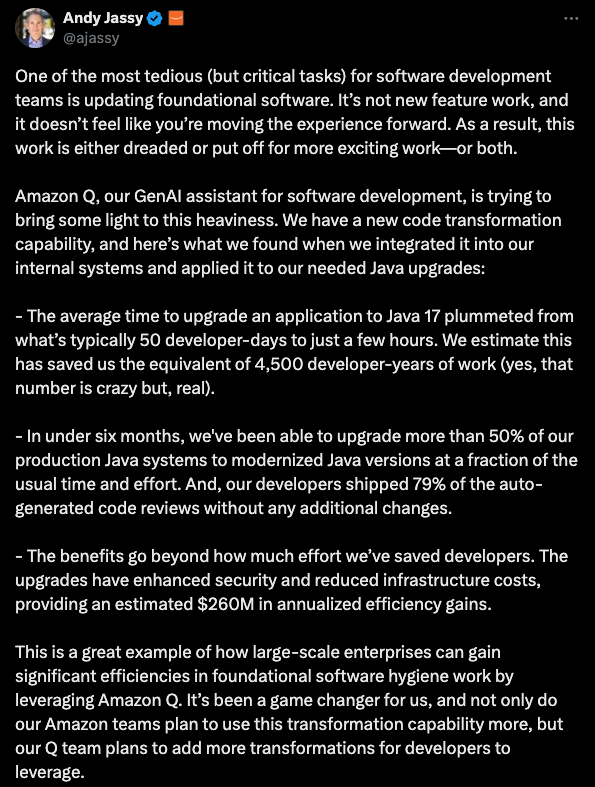

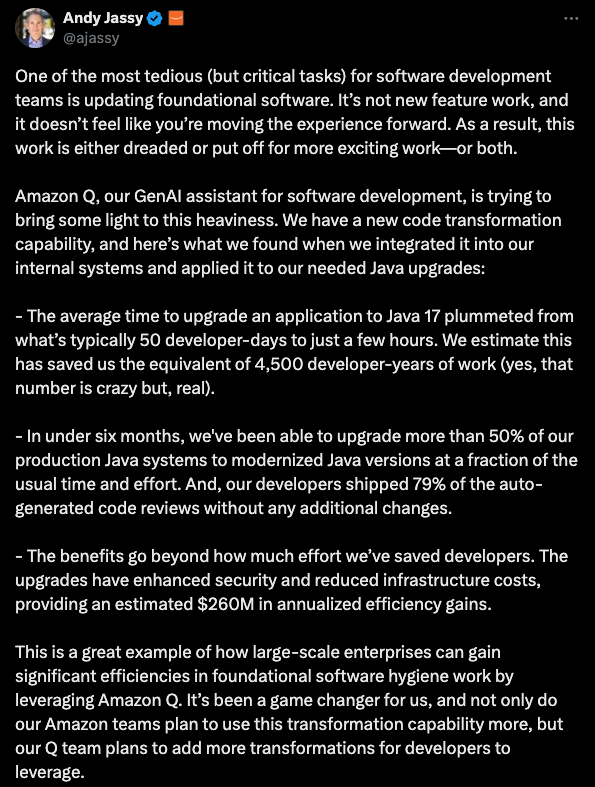

Recently, I came across a post that caught my attention. It claimed extraordinary time and cost savings achieved through Amazon’s AI coding assistant, Q, for a Java upgrade project. The post described upgrading from Java 11 to 17, with results that seemed almost too good to be true:

I see a LOT of companies claiming to have “automagical” solutions, and the reality is that they don’t talk about the amount of behind-the-scenes work it takes to get these solutions to actually do what they claim.

The Claims

- Upgrading a single Java application from version 11 to 17 was reduced from 50 days to just a few hours using Amazon Q.

- The company saved 4,500 developer years of work.

- The upgrade to Java 17 reportedly saved $260 million through improved efficiency and security.

- They fail to publish the strategy used to accomplish this, how long it took to set up training data, what type of training data they used, or the overall approach: did they upgrade step by step (11 to 12, 12 to 13, and so on), or was it a straight shot from 11 to 17?

- They fail to discuss the testing infrastructure in place for this project. Most companies of this size and scale have fully automated testing suites.
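As a rough sanity check on the first two claims, here is a back-of-the-envelope sketch. It assumes that "50 days" means 50 developer working days per application, that a developer year is about 250 working days, and that the "few hours" of assisted work are negligible. None of these assumptions come from the post itself.

```python
# Back-of-the-envelope check. Only the two CLAIMED_* figures come from the post;
# everything else is an assumption made for illustration.
CLAIMED_DEV_YEARS_SAVED = 4_500   # from the post
CLAIMED_DAYS_PER_APP = 50         # from the post, read here as developer working days
WORKING_DAYS_PER_YEAR = 250       # assumption: roughly 50 weeks x 5 days


def implied_app_count(dev_years_saved: float, days_per_app: float,
                      working_days_per_year: float = WORKING_DAYS_PER_YEAR) -> float:
    """Number of upgrades needed for the savings claim to add up,
    treating the assisted 'few hours' per upgrade as negligible."""
    total_days_saved = dev_years_saved * working_days_per_year
    return total_days_saved / days_per_app


apps = implied_app_count(CLAIMED_DEV_YEARS_SAVED, CLAIMED_DAYS_PER_APP)
print(f"Implied number of upgraded applications: {apps:,.0f}")
```

Under these assumptions the two figures only reconcile if roughly 22,500 separate applications were upgraded. A rollout at that scale would depend heavily on automation and test infrastructure that was already in place.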

My Analysis and Counterpoints

While these claims are attention-grabbing, I believe it’s important to approach them with a healthy dose of skepticism. Here are some key factors I think we need to consider:

1. The Hidden Costs of AI Implementation

What I find notably absent from this success story is the time and resources invested in setting up Amazon Q for this specific use case. From my perspective, it’s highly unlikely that this was a simple “plug-and-play” solution. I suspect considerable effort went into:

- Customizing Amazon Q for the specific codebase and upgrade requirements

- Training the AI on the company’s coding standards and practices

- Developing custom workflows and integrations

2. The Reality of AI Coding Assistants

As someone who has personally tested Amazon Q and uses other AI coding assistants like Claude, I can attest that the out-of-the-box experience with these tools often falls short of the hype. In my testing, I found that Amazon Q in particular has limitations in its current form:

- The chatbot functionality was a huge disappointment. It can’t generate code; it only creates “strategies for coding”.

- The plugin to autocomplete code is similar to existing tools like GitHub Copilot

- I found the documentation and setup challenging. It took me over an hour to figure out how to set this up: the documentation was not clear, and many of the setup steps weren’t intuitive.

- Skilled developers who understand how to effectively prompt and guide the AI

- A solid understanding of the codebase and upgrade requirements

- Careful review and testing of AI-generated code

4. The Power of Targeted AI Assistance

Despite these caveats, I do recognize the immense potential of AI in software development. In fact, I’ve been leveraging AI to create Python automation scripts for over a year now, and my experience has been eye-opening. I’ve been using Claude.ai to build code from scratch, with remarkable results. What I’ve discovered is that the key to success lies in how you structure your instructions to the AI. I’ve developed a method where I provide:

- A clear schema for the desired output

- Code examples that illustrate the expected result

- Detailed UX guidance and rules for the script’s behavior

- Keep the scope narrow and well-defined for each coding task

- Provide clear context, requirements, and examples

- Iterate and refine the prompts based on the AI’s output

- Review and test the generated code thoroughly
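A minimal sketch of how the three inputs listed above (an output schema, code examples, and behavior rules) might be assembled into one narrowly scoped prompt. `PromptSpec`, `build_prompt`, the section headings, and the sample task are hypothetical names chosen for illustration, not the exact format used for the scripts described here.

```python
import json
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for one narrowly scoped coding task."""
    task: str
    output_schema: dict
    examples: list[str] = field(default_factory=list)
    rules: list[str] = field(default_factory=list)


def build_prompt(spec: PromptSpec) -> str:
    """Join the task, schema, examples, and rules into one plain-text prompt."""
    sections = [
        "## Task\n" + spec.task.strip(),
        "## Output schema\n" + json.dumps(spec.output_schema, indent=2),
    ]
    if spec.examples:
        examples = "\n\n".join(e.strip() for e in spec.examples)
        sections.append("## Examples of the expected result\n" + examples)
    if spec.rules:
        rules = "\n".join(f"- {r}" for r in spec.rules)
        sections.append("## Behavior rules\n" + rules)
    return "\n\n".join(sections)


# Illustrative values only; the task and rules are made up for this sketch.
spec = PromptSpec(
    task="Write a Python script that renames files in a folder by date.",
    output_schema={"script": "string", "usage": "string"},
    examples=["2024-01-31_report.pdf"],
    rules=["Ask before overwriting an existing file.",
           "Print a summary at the end."],
)
prompt = build_prompt(spec)
print(prompt)
```

Keeping each part in its own labeled section makes it easier to iterate on one piece, such as tightening a rule or swapping an example, without rewriting the whole prompt.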

5. My Experience with Amazon Q’s Limitations

While my experience with Claude.ai has been AMAZING, I’ve found that not all AI coding assistants are created equal. To illustrate some of the current limitations of Amazon Q, let me share this disappointing response I received when asking it to perform a task similar to what I’ve been successfully doing with Claude: